I was initially tempted to repurpose a lot of the content I wrote for Mode’s announcement blog post to generate this case study. And to be fair, there are a lot of nuggets o’ wisdom in there too! But in the wise words of Julie Andrews, let’s start at the very beginning, a very good place to start.

AI Assist didn’t stem from user research, though it was certainly built for, iterated on, and shaped by our customers. AI Assist was born during an internal company hackathon in June 2023. (Side note: if you ever have a few hours to waste, I dare you to ask me how I feel about Mode’s hackathon events. Spoiler alert: I’m a pretty big fan.) That said, I wasn’t sure how I felt going into this one, as we’d been assigned a subject area to focus on: artificial intelligence.

Ultimately, I joined a group of engineers who were particularly excited about experimenting with LLMs and text-to-SQL workflows. The 48 hours that followed were nothing short of a blur. One of the benefits of having to produce results in such a ridiculously short timeframe is that it forces you to set constraints, follow your gut instincts, and just try the damn thing.

We applied numerous constraints to finish, of course, but I’d argue that the most impactful one was the group’s early decision to focus the solution on our core analyst audience. At first glance, this may not seem like a revolutionary or controversial constraint, but at the time we were in the midst of a multi-year effort to expand Mode’s feature set to better support folks beyond the analytics team (more on that in a different case study). We could just as easily have argued that it made more sense to build a SQL generator for, well, non-SQL writers. Instead, we followed our instincts and harkened back to Mode’s OG mantra: “by analysts, for analysts.”

Once we decided to home in on SQL writers, assumptions and focus areas quickly fell into place. One major assumption was that analysts would already have a solid understanding of how their data was modeled and organized. This allowed us to punt on really vague, open-ended requests, which would require AI to conduct broad, expensive data discovery and interpretation work on top of translating text to SQL. Instead, we decided to laser focus on the translation step and require the user to provide at least one table name as context for the data they wanted to query. This made the resulting workflow much, much simpler.

Our goal? Help analysts (at all experience levels) write SQL more efficiently by letting them mix English phrases into their SQL. With the click of a button, users could then leverage AI to translate their natural language comments into valid SQL, at which point we would run the completed query and surface the results.

With this plan in motion, I quickly got to work on the designs needed to make it happen.

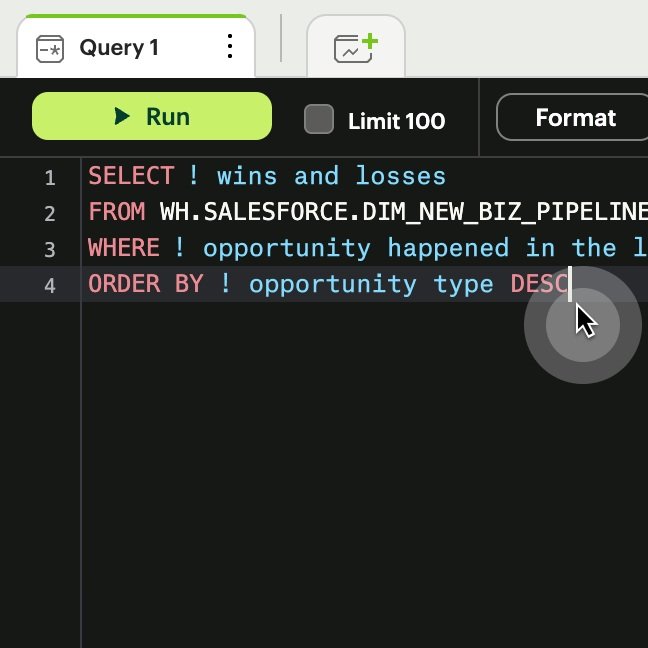

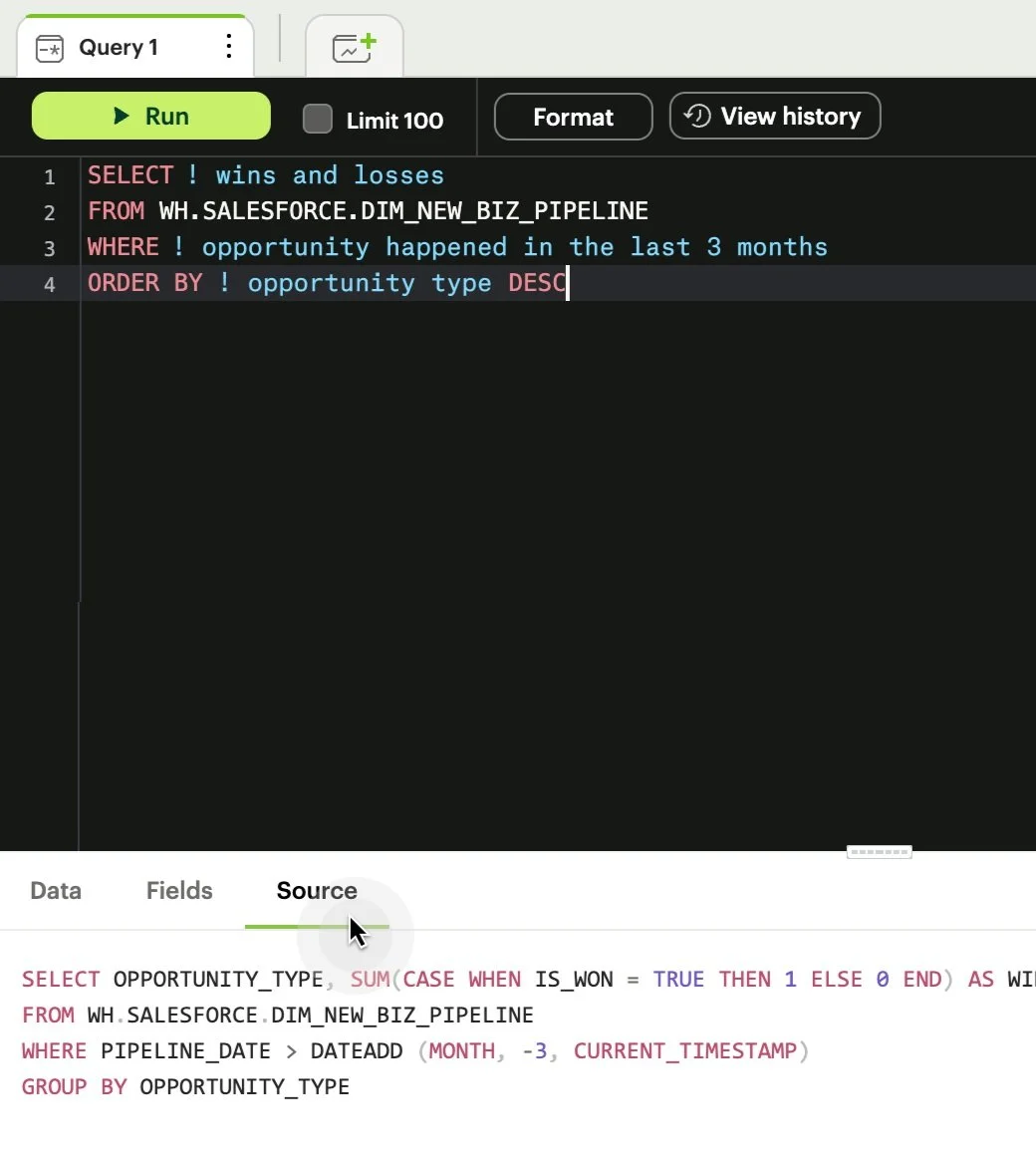

Context switcher to engage this new mode of query writing

Custom syntax highlighting to differentiate English from SQL, initiated by the bang symbol (!)

Source tab so user could quickly review the AI-generated syntax that was executed and compare it with their original edits

When we finished, we had managed to prepare both a working demo and a short hype video to really sell the dream. We submitted the project under the codename FlexQL, as we were especially drawn to the flexibility offered by this “pseudo-code” approach. Thankfully, we weren’t the only ones intrigued by the idea: the entry earned us the two most esteemed Hack Day awards 🏆, Best in Show and Hacker’s Choice.

“It hadn’t occurred to me to do it this way, but in hindsight it seems almost obvious,” our cofounder said.

Validation and iteration

Hack Day left us with a lot of internal momentum, and shortly afterward news broke that we were being acquired by ThoughtSpot, a well-known BI player that was ahead of the game when it came to AI functionality. But despite the growing push to ride the generative AI wave, stakeholders still weren’t entirely convinced that FlexQL would resonate with our customers (specifically more advanced analysts), and they worried there wasn’t a clear path to evolving the UX.

In terms of inspiration, the market had a few options I could look to. There were code-complete solutions for software developers, and conversational chatbots for practically everyone else. But our workflow and idea didn’t really fall neatly into either camp, hence the stakeholder nerves.

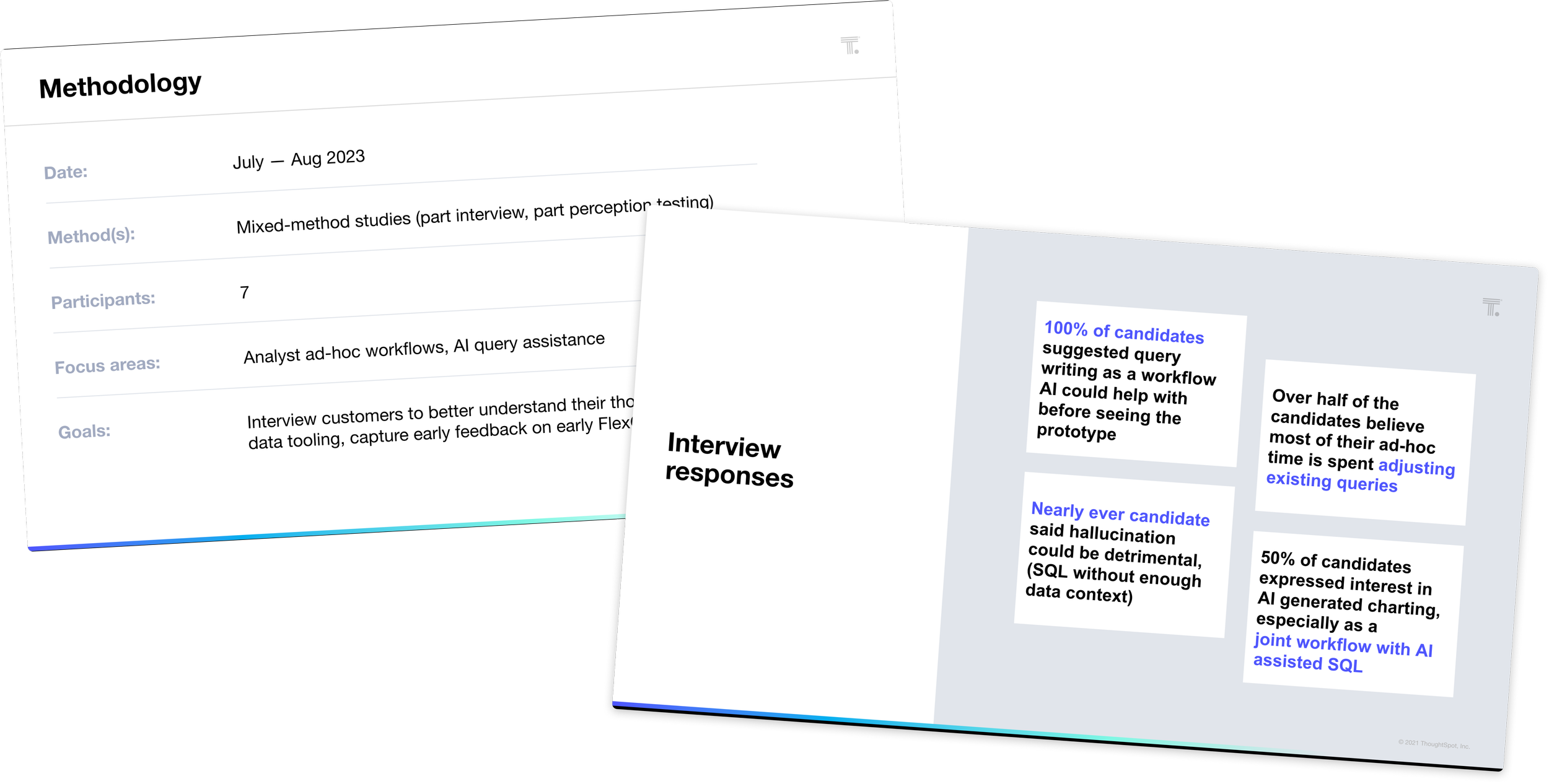

To de-risk the project, I conducted customer research to better understand how analysts were already working with ChatGPT, and how they felt AI could make their jobs easier. The one thing we heard from every analyst was that they were spending the majority of their time in Mode editing or revising the same, or similar, queries. If they could lean on AI to expedite these SQL edits, or even encourage others to attempt them on their own, they could spend more of their time on impactful work and respond to even more questions, unlocking additional insights.

In addition to validating the problem, I also used these sessions to help evolve the prototype and solidify the UX, specifically the mental model and role that natural language would play within our query editor.

Customer reactions to seeing the Hack Day prototype demo

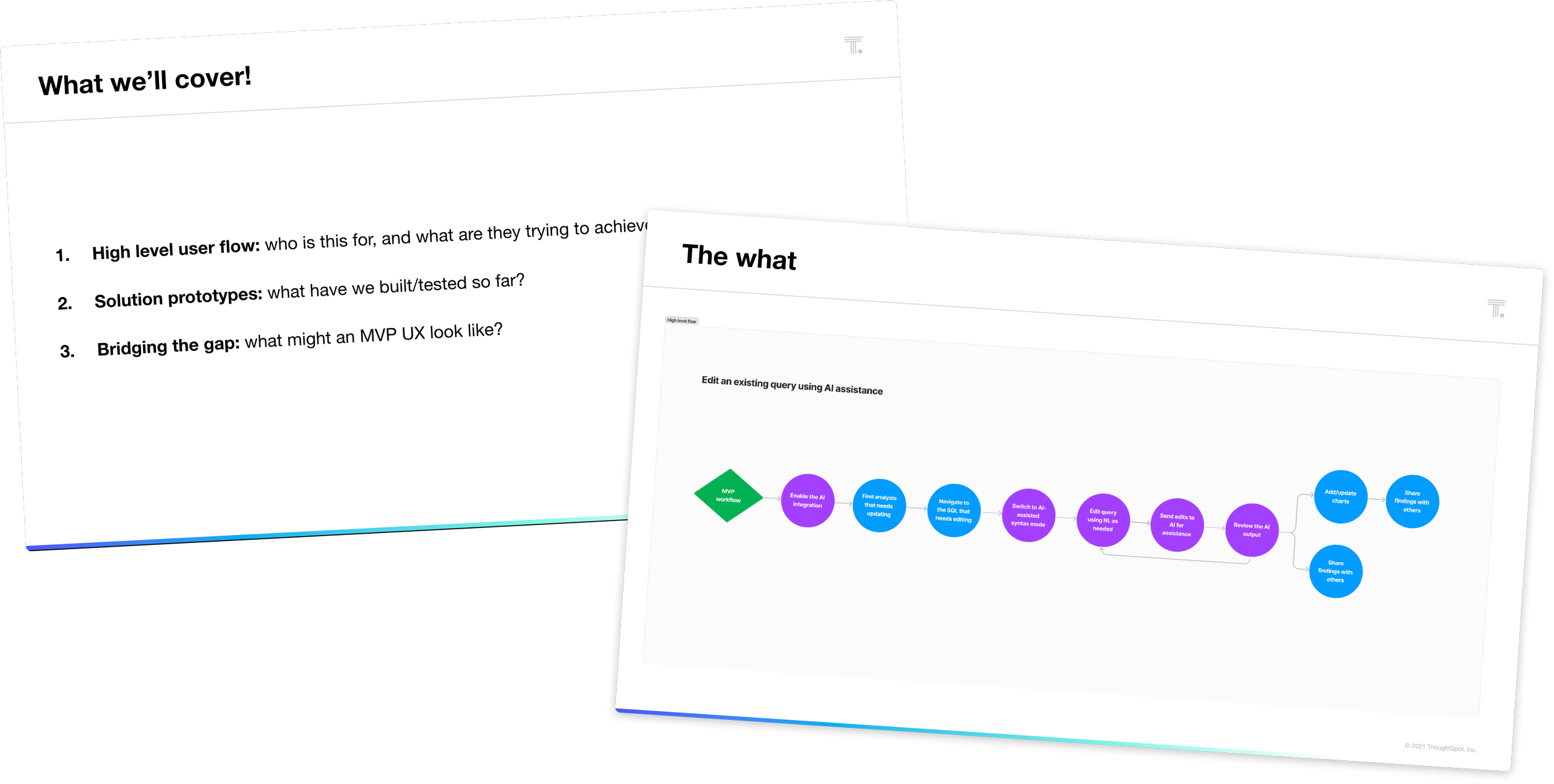

After getting initial reactions to our FlexQL proof of concept, I closed each session by sharing three design prototypes, each teasing a different approach to leveraging AI. All three shared the goal of helping analysts preview and iterate on AI-generated SQL more quickly:

AI as on-demand code snippets: in this model, English phrases could act like a variable name or facade for more complex statements that AI would translate behind the scenes. The phrases could potentially remain in your SQL queries indefinitely, allowing less technical users to parse the logic more easily.

AI as a collaborative reviewer: this iteration allowed analysts to step through AI code generations as if they were suggested edits in a Google Doc. Though I anticipated this design would feel overwhelming, I was interested in whether users might want to give feedback to and receive feedback from AI as if it were another analyst.

AI as a side-by-side coding environment: this approach took the most inspiration from code editors, and allowed users to quickly compare the differences between their original edits and any AI-generated SQL before consciously accepting and inserting the updates in place of their own.

Ultimately, the third approach resonated most with customers. Analysts felt it gave them a clear path to review and validate any AI-generated SQL, while doubling as a teaching tool for their more novice users.

Option 1: AI as on-demand code snippets

Option 2: AI as a collaborative reviewer

Option 3: AI as a side-by-side coding environment

Alignment and sequencing

Shortly after we presented the research findings, the project was officially green-lit and a team was staffed. We were also given a rather ambitious deadline for soft-launching the MVP, and we settled on a safer feature name to go to market with: AI Assist.

Despite Nic’s early partnership, this was my first initiative that didn’t have a dedicated PM.

As a result, I got to take on a lot of product management aspects of the project. I led regular working sessions to help align the team on the research findings and discuss what we ought to prioritize before the initial launch.

I also got to dive head first into the world of prompt engineering as we figured out exactly how to leverage OpenAI’s API to generate SQL, and what metadata we’d need to send along. Though I have the privilege of working closely with engineering on typical projects, this time I paired even more closely than I normally would, which allowed us to work that much more iteratively and collaboratively. 100/10 experience, would do again.

Design explorations

We didn’t have much time to iterate on the MVP after the soft launch goal was announced, but I did explore a handful of UI and copy iterations with the design team. I also dogfooded the heck out of this thing.

A few weeks ahead of the soft launch, we were able to conduct our first usability tests with customers. These led to a few enhancements and prompt fixes before launch, helped us create valuable customer-facing demos and onboarding materials, and enabled us to document more concrete use cases after seeing analysts use the feature in the wild.

The final product included updated syntax highlighting that built on the bang symbol but borrowed the double dash of traditional SQL comments (--!), a side-by-side interface for users to quickly generate AI output and compare it alongside their edits, a few handy keyboard shortcuts, and 👍🏼 👎🏼 feedback mechanisms for customers to score a given AI generation (with the opportunity to elaborate after scoring a generation as “unhelpful”). These scores gave us training data to work with as we updated the model over time.

After some initial feedback from soft launch customers, we prioritized a few more enhancements and “hard launched” at the end of January. Given the sensitive nature of data access and security, AI Assist is available to customers on an opt-in, per-request basis (either at the user/group level or for an entire Workspace).

Here are some early results after the first couple of months:

Number of organizations that have requested access: 100+

Total number of AI queries generated: ~2,000

Total number of users who have generated at least one AI query: 300+

Appreciations

I learned a heck of a lot during this project, but I sure as hell didn’t do it alone. Benn Stancil once reminded me that it’s important to tell the world how great your colleagues are. So here is my unabbreviated appreciation post, which would otherwise be buried in a Slack channel:

To Nic Galluzzo, for the Hack Day question that started it all (“some kind of hybrid query approach?”) and for all of the initial PM partnership. Your customer-centric drive and passion to learn and iterate were so critical in helping us get off the ground, dive into the unknown, focus on the opportunities, and start putting our ideas in front of users.

To Jared Smith, Oliver Sanford, and everyone else who so willingly contributed their ideas, time, and effort to the Hack Day project that would eventually become AI Assist.

To Eddie Tejeda, for all of the enthusiasm, OpenAI learnings and research, and the engineering leadership that helped us get the project officially staffed. And to leadership generally, for giving us the freedom to explore and pursue an idea that looked and felt a bit different from the AI chat clients that were becoming more prevalent by the day.

To Asha Hill, Jake Walker, Daniel Riquelme, Kian Zanganeh, Samuel Weick, Alfredo Santoyo, Neha Hystad, Megan Kard, Simran Gunsi, Jennifer Gieber, and everyone else who participated in the earliest phases of (rough) internal testing and rapid design workshopping. Asha Hill was critical in providing a much needed analyst voice, and helped us practice with and consider use cases that would resonate with analysts.

To Paul Thurlow, for evolving the Hack Day prototype into something we could think about enabling for customers. For continuing to iterate on his own, for always poking the right holes, and for challenging us to look at things in new ways. I so look forward to the day that we can start revisiting AI SQL autocomplete concepts.

To Divya Ranganathan Gud, for stepping in as Engineering Manager and doing an incredible job of keeping us focused and motivated. When you communicated the deadline to the team, you did so in a way that felt less like a requirement and more like an opportunity... something to work towards. Your perspective was contagious, and it pushed us in all the right ways.

To Sara V, for supporting the team in so many different ways, many of which go unnoticed. Thank you for helping us quickly work through challenges, digging into all of the security and privacy aspects of this project, and clearing every blocker to get us release-ready. Thank you for always finding the time, despite everything else on your plate, to help me craft customer email replies and pair on a sample query for over an hour, just to make sure our examples and hype video were, in fact, hype.

To Stephen Ajetomobi, for jumping on to support the BE aspects of this project and for ramping and contributing so quickly. Hope to work with you again!

To Ravi Patel and Garrett Griffith, my front-end eng partners in crime! Not only did y’all go above and beyond in implementing the interface and workflows, you brought so many new ideas to the table, always considered ways to make things better even within tight timelines, and brought such incredible amounts of energy and enthusiasm to every meeting and DM. The pride and care that y’all demonstrated for this feature resonates in every single interaction. Thank you.

To Zac Farrell, our BE backbone and prompt pioneer! It’s been an absolute joy getting to design and collaborate with you. Thank you for navigating so much unfiltered feedback with such grace, for talking through all-the-things, and for pushing us to experiment and make changes when things weren’t working as well as they could have been. AI and prompt engineering are new territory for so many of us, but you always rose to the occasion and worked to up-level us all.

And finally, to everyone on the GTM side who matched the team’s energy to bring this to market. To Christine Sotelo, for whipping up a release plan in record time and jumping in to give feedback on all things comms. To Jennifer Spoelma, for ramping on education and building out Intercoms to ensure even our earliest customers would be properly trained from day one. And to Mia Muench and Juliette Maymin, for publishing the new AI Assist help docs. The lengths Juliette went to in making sure the page looked perfect, getting the changes approved in time, and communicating her progress along the way were truly remarkable. Witnessing it felt like watching the final leg of a relay race, as y’all picked up the baton and ran.